While computer science only emerged as a distinct academic discipline in the 1950s, the key ideas behind it date back centuries before the first computer science degree was awarded. What have been the key developments in computer science to date, and which trends are shaping up to be the most prominent in the future? This article provides a brief overview of the history of computer science and of the domains that researchers are likely to focus on in the next decade.

History of computer science

Before 1900

In essence, computer science deals with the use of machines that facilitate computation. Mechanical devices that aid calculation have been in use for thousands of years, starting with the abacus, which has existed for at least 4,000 years. Over the centuries, people developed sophisticated analog devices such as the Antikythera mechanism, used in ancient Greece to predict astronomical positions. Notable inventions from early modern Europe include Napier’s rods (c. 1610), which simplified multiplication, Pascal’s mechanical adding machine (1642), and the mechanical calculator of Leibniz (1694).

The central idea of computer science, namely using automated machines to represent and solve complicated problems, was embraced by Charles Babbage (1791-1871), who designed two devices: the Difference Engine and the Analytical Engine. Ada Lovelace (1815-1852), who worked with Babbage on the Analytical Engine and is often regarded as the first computer programmer, recognised that such machines could be used for much more than simple calculations.

Hollerith’s tabulating machine, designed to help process the 1890 US census. From https://www.ibm.com/ibm/history/ibm100/us/en/icons/tabulator/.

While Babbage never completed his engines, later inventions such as Jevons’s logic piano (1869) and Hollerith’s tabulating machine (1889) successfully handled complicated tasks that would have taken far longer without a machine’s aid. As engineering advanced, the ideas behind these early designs would continue to influence researchers for decades.

1900-1950

Early on, engineers and inventors were restricted to building special-purpose mechanical devices. For example, Carissan’s factoring machine (1919) was designed to test integers for primality and to factor composite integers. In 1928, David Hilbert, an influential German mathematician, posed several questions on the foundations of mathematics, including the so-called Entscheidungsproblem (the ‘decision problem’), which asked whether mathematics was decidable: is there a mechanical method for deciding the truth of any mathematical statement? In 1936, Alan Turing answered the decision problem in the negative by constructing a formal model of a computer, the so-called Turing machine (Alonzo Church reached the same conclusion independently).

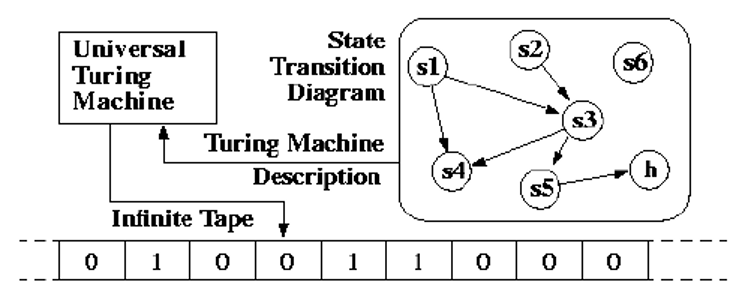

Universal Turing machine (UTM). From https://web.mit.edu/manoli/turing/www/turing.html

The construction demonstrated that there are problems no machine can solve, such as the "halting problem": deciding, for an arbitrary program and input, whether the program will eventually finish running.

In the 1940s, the development of computer science was heavily influenced by World War II. Wartime computational needs such as ballistics calculations accelerated the development of large-scale computers such as the Harvard Mark I (1944), an electromechanical computer partly inspired by Babbage’s Analytical Engine, and ENIAC (1945), a programmable electronic digital computer designed by Mauchly and Eckert for artillery calculations. Another wartime influence on computer science came from military code-breaking. Alan Turing and his colleagues at Bletchley Park used the electromechanical Bombe to break messages encrypted with the German Enigma machine, while the Colossus computer, built by Tommy Flowers, was used in the cryptanalysis of the German army’s Lorenz cipher.

1950-1980

In the following decades, the evolution of computer science was driven by engineering inventions such as transistors, magnetic-core memory, and integrated circuits. Nevertheless, many ideas from this early period are still used in some form in modern computers. For example, von Neumann wrote a merge sort routine (1945) for ENIAC’s successor, EDVAC (1949). While earlier machines such as ENIAC had to be physically rewired to run a new program, Wilkes’s EDSAC (1949) was among the first stored-program digital computers. The invention of the compiler (1951) and of programming languages such as FORTRAN (1957) and LISP (1958) was particularly influential.

Aside from breakthroughs in engineering, major advances in computer science arose from theoretical considerations. Dijkstra developed efficient algorithms for graph problems such as finding shortest paths and minimum spanning trees (1959). One of the first efforts in the area of artificial intelligence was the Turing Test (1950), which replaced the question "Can machines think?" with an imitation game in which a human interrogator questions an unseen respondent and tries to tell whether it is a human or a machine. Major advances were also made in operating systems, new programming languages, and theoretical frameworks such as formal language theory and automata theory. By the 1960s, these ideas spanned a wide range of topics and domains, and computer science came into its own as a separate discipline with dedicated departments and degree programmes.
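To make the shortest-path problem concrete, here is a minimal Python sketch of Dijkstra’s algorithm. It is an illustration under stated assumptions, not Dijkstra’s original 1959 formulation: it uses a binary-heap priority queue (Python’s standard `heapq` module), which is a later refinement, and it assumes the graph is a dictionary mapping each node to a list of `(neighbour, weight)` pairs with non-negative weights. The graph `roads` is a made-up example.

```python
import heapq


def dijkstra(graph, source):
    """Return a dict of shortest-path distances from `source`.

    Assumes `graph` maps node -> list of (neighbour, weight) pairs and
    that all weights are non-negative, as Dijkstra's algorithm requires.
    """
    dist = {source: 0}
    heap = [(0, source)]  # (best known distance, node)
    while heap:
        d, node = heapq.heappop(heap)
        if d > dist.get(node, float("inf")):
            continue  # stale entry: a shorter path to `node` was already found
        for neighbour, weight in graph.get(node, []):
            candidate = d + weight
            if candidate < dist.get(neighbour, float("inf")):
                dist[neighbour] = candidate
                heapq.heappush(heap, (candidate, neighbour))
    return dist


# Hypothetical example graph: A -> C -> B -> D is cheaper than the direct edges.
roads = {
    "A": [("B", 4), ("C", 1)],
    "C": [("B", 2), ("D", 5)],
    "B": [("D", 1)],
}
print(dijkstra(roads, "A"))  # {'A': 0, 'B': 3, 'C': 1, 'D': 4}
```

Because edge weights are non-negative, the first time a node is popped with a non-stale distance, that distance is final; outdated heap entries are simply skipped rather than removed.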

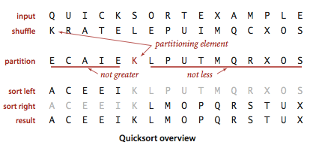

One of the key research topics of that period was proving the correctness of programs. Hoare recognised the importance of formal methods for verifying program correctness and developed a formal system, Hoare logic (1969), for reasoning about computer programs. Hoare also invented Quicksort (published in 1961), a sorting algorithm that is still commonly used today.

Quicksort overview. From https://algs4.cs.princeton.edu/23quicksort/.
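Quicksort’s core idea is to pick a pivot, partition the list so that smaller elements end up before larger ones, and then sort each part recursively. The sketch below is one possible Python rendering, assuming the Hoare partition scheme with the middle element as the pivot; the function names `quicksort` and `partition` are illustrative, and production sorting routines typically use more elaborate variants.

```python
def partition(items, lo, hi):
    """Hoare partition of items[lo..hi] (inclusive).

    Returns an index j such that every element of items[lo..j] is <= every
    element of items[j+1..hi]. The middle-element pivot is an assumption.
    """
    pivot = items[(lo + hi) // 2]
    i, j = lo - 1, hi + 1
    while True:
        # Move i right to the first element that should not stay on the left.
        i += 1
        while items[i] < pivot:
            i += 1
        # Move j left to the first element that should not stay on the right.
        j -= 1
        while items[j] > pivot:
            j -= 1
        if i >= j:
            return j
        items[i], items[j] = items[j], items[i]


def quicksort(items, lo=0, hi=None):
    """Sort the list `items` in place."""
    if hi is None:
        hi = len(items) - 1
    if lo < hi:
        split = partition(items, lo, hi)
        quicksort(items, lo, split)
        quicksort(items, split + 1, hi)


data = [5, 2, 9, 1, 5, 6]
quicksort(data)
print(data)  # [1, 2, 5, 5, 6, 9]
```

Sorting in place, rather than building new lists at each step, is what made Quicksort attractive on memory-constrained machines; its average running time is O(n log n), though a consistently bad pivot can degrade it to O(n²).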

The state of computer science in the 1970s is reflected in Knuth’s ‘The Art of Computer Programming’ (1968-1973), a comprehensive monograph on the programming and analysis of algorithms. Major work was done on the theory of databases, computational complexity and NP-completeness, and public-key cryptography. Developments in the theory of algorithms and computation were accompanied by major engineering inventions such as the microprocessor (Intel’s 4004, 1971), supercomputers such as the Cray-1 (1976), and the RISC architecture (1980), as well as advances in programming environments such as the Pascal (1970) and Ada (1980) programming languages and the Unix operating system (1973).

1980-2000

The key developments that influenced the evolution of computer science during this period were the rise of the personal computer and the invention of the Internet. While the first personal computers date back to the early 1970s, home computers rapidly rose in popularity in the 1980s. As the industry became more competitive, with firms such as Apple, IBM, and Commodore investing heavily in the home computer market, computer science played a growing role in improving software and hardware architecture, tackling problems such as designing integer arithmetic and floating-point units, optimising instruction sets, and unifying instruction and data caches.

The theoretical foundations for Internet communications were laid as early as the 1940s through research on information theory. In particular, Claude Shannon (1916-2001) introduced the notion of information entropy and described the limits of reliable communication over noisy channels (1948). Packet switching (1965) grouped data into packets and made routing decisions for each packet individually. It served as the basis for several networks, including the NPL network, ARPANET, and CYCLADES, as well as the X.25 protocol.
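Shannon’s entropy of a source whose symbols occur with probabilities p is H = -Σ p·log2(p) bits per symbol: the average number of bits needed to encode each symbol. As a rough illustration, the hypothetical helper `entropy_bits` below estimates it from a single message, under the simplifying assumption that the observed symbol frequencies are the true probabilities.

```python
import math
from collections import Counter


def entropy_bits(message):
    """Estimate Shannon entropy in bits per symbol from symbol frequencies.

    Computes sum(p * log2(1 / p)), which equals -sum(p * log2(p)).
    Treating observed frequencies as probabilities is an assumption.
    """
    counts = Counter(message)
    n = len(message)
    return sum((c / n) * math.log2(n / c) for c in counts.values())


print(entropy_bits("aaaa"))  # 0.0: fully predictable, no information per symbol
print(entropy_bits("abab"))  # 1.0: two equally likely symbols need one bit each
print(entropy_bits("abcd"))  # 2.0: four equally likely symbols need two bits each
```

The more predictable a message, the lower its entropy, which is why predictable data compresses well and why Shannon’s measure sets a lower bound on lossless compression.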

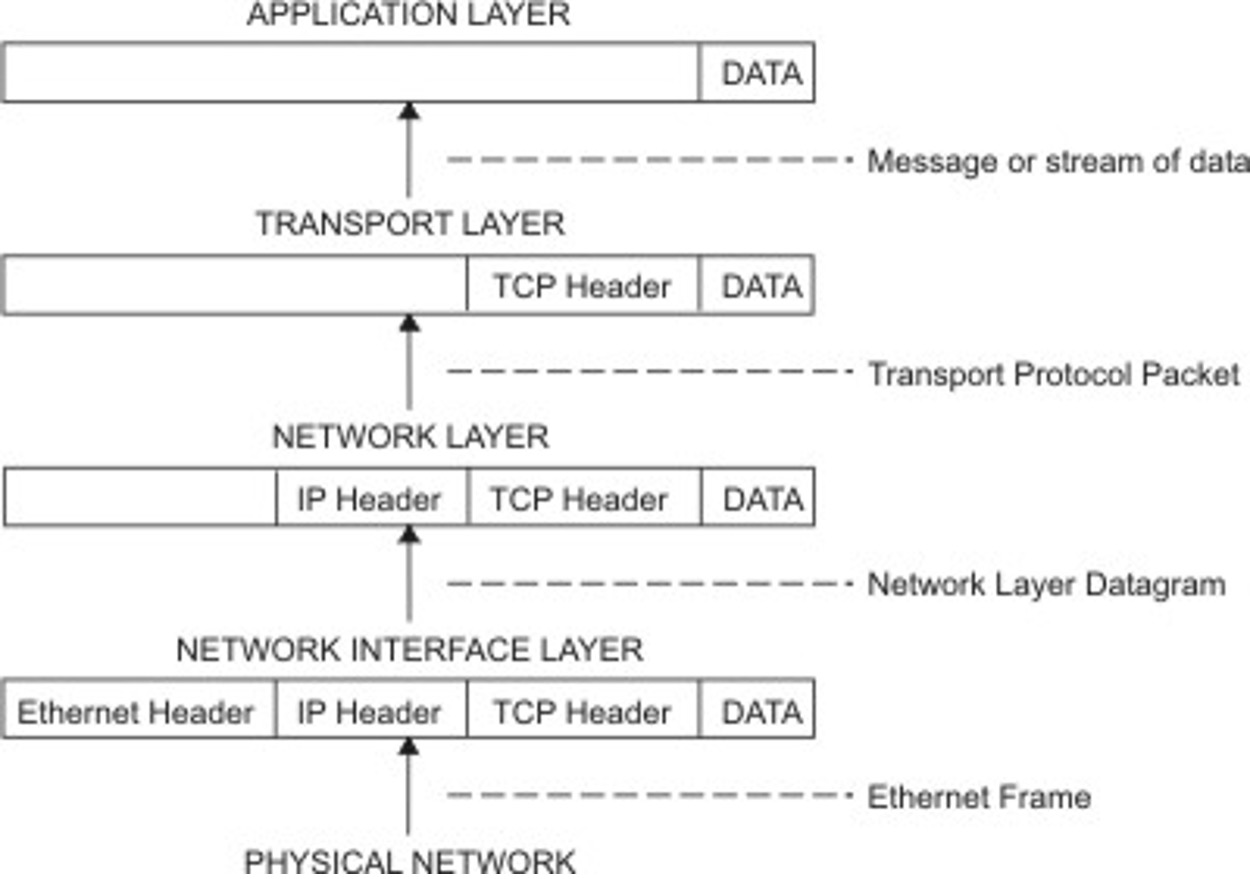

Movement of information from host to application under the TCP/IP protocol suite. From https://www.ibm.com/docs/en/aix/7.1?topic=protocol-tcpip-protocols.

The differences in network protocols complicated communication between networks. To remedy this, these differences were masked by an internetwork protocol shared by all networks. The resulting protocol, developed by Cerf and Kahn and called the Transmission Control Program (1974), solved the fundamental problems of internetworking. The protocol was later split into two parts, the Transmission Control Protocol (TCP) and the Internet Protocol (IP), to separate the transmission control and routing functions. TCP/IP was used to connect a variety of networks, including ARPANET, the National Aeronautics and Space Administration (NASA) network, and the National Science Foundation (NSF) network. TCP/IP was also installed on major computer systems of the European Organization for Nuclear Research (CERN). Tim Berners-Lee, a computer scientist at CERN, would later (from 1989) develop the World Wide Web, together with the uniform resource locator (URL) system of globally unique identifiers, the Hypertext Markup Language (HTML), and the Hypertext Transfer Protocol (HTTP).
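The core idea shared by packet switching and TCP can be shown with a toy example: split a byte stream into numbered packets, let them travel and arrive in any order, and reassemble them at the destination. The `Packet` type and the `packetize` and `reassemble` functions below are hypothetical, and the sketch deliberately ignores much of what real TCP/IP handles, such as lost packets, retransmission, acknowledgements, flow control, and checksums. As in TCP, each packet is labelled with the byte offset of its payload rather than a packet count.

```python
import random
from typing import NamedTuple


class Packet(NamedTuple):
    seq: int        # byte offset of this payload within the original stream
    payload: bytes


def packetize(data: bytes, size: int) -> list:
    """Split `data` into packets of at most `size` bytes, tagged with their offsets."""
    return [Packet(i, data[i:i + size]) for i in range(0, len(data), size)]


def reassemble(packets) -> bytes:
    """Rebuild the stream from packets that may have arrived out of order."""
    return b"".join(p.payload for p in sorted(packets, key=lambda p: p.seq))


packets = packetize(b"hello, internetwork", 4)
random.shuffle(packets)  # simulate packets taking different routes
print(reassemble(packets))  # b'hello, internetwork'
```

Because every packet carries its own position, the network is free to route each one independently, and ordering is restored only at the endpoints.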

2000-2020

The 2000s saw rapid technological advances that led to widespread use of home computers and the Internet, more versatile personal devices, and more powerful supercomputers. These trends would accelerate further in the 2010s, posing new challenges for computer scientists working on data structures and algorithms, computation theory, programming languages, computer architecture, distributed computing, databases, and computer security.

Interdisciplinary fields such as bioinformatics and quantum computing gained more attention. In quantum computing, researchers are working on a generalisation of classical logic that applies to quantum computers, on quantum algorithms (most notably Shor’s and Grover’s algorithms), on the implementation of quantum gates, and on demonstrations of quantum computers with a small number of qubits. The number of qubits has increased rapidly, from 3 qubits in 2000 to 12 qubits in 2006, with the record at the time of writing being IBM’s 433-qubit Osprey processor (2022).

Overall, recent advances in computer science seem to be dominated by data analytics and Artificial Intelligence (AI) applications. Search algorithms and big data analysis have become a major focus of computer scientists as more and more data is generated by users of personal computers and computer networks. Big Tech companies such as Google, Amazon, and Facebook now rely heavily on algorithms that analyse large amounts of unstructured data. A key innovation in computer science is deep learning, whose potential was highlighted in the 2012 ImageNet competition, when a convolutional neural network (AlexNet) substantially outperformed competing algorithms in recognising images. It is no surprise that AI research is currently one of the most prominent trends in computer science.

Trends in research

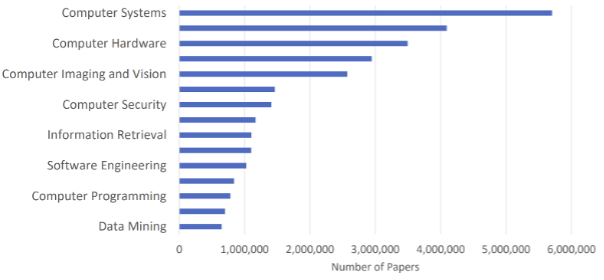

Recent scientometric research provides some insight into major trends in computer science. Over the last decade, research on computer systems, computer hardware, and computer imaging and vision has dominated the academic landscape.

Distribution of the research areas in terms of relevant papers. Source: Dessí, D., Osborne, F., Reforgiato Recupero, D., Buscaldi, D., & Motta, E. (2022). CS-KG: A large-scale knowledge graph of research entities and claims in computer science. In International Semantic Web Conference (pp. 678-696). Springer, Cham.

Analysis of more recent trends shows that AI, its applications in image processing and Natural Language Processing (NLP), bioinformatics, cybersecurity, and robotics are among the most prominent directions of research in computer science.

AI aims to build systems that perform tasks normally requiring human intelligence, and machine learning (ML), a subfield of AI, does so by letting computers learn patterns from data rather than follow explicitly programmed rules. Key research areas in AI and ML include decision support systems, cellular automata, Bayesian methods, genetic algorithms, cognitive systems, image and natural language processing, and swarm intelligence.

By now, the use of ML has become ubiquitous. Its major uses include online platforms and social media, self-driving cars, virtual personal assistants, recommendation and spam-filtering systems, speech recognition, medical diagnostics, and stock market trading. The recent surge in AI-generated art illustrates why AI continues to be a major field of interest for computer scientists. Users can simply type a description of what they would like to see into an AI image generator such as DALL-E or Stable Diffusion and receive impressive images within seconds.

Images generated using Stable Diffusion AI (“Dragon battle with a man at night in the style of Greg Rutkowski”). From https://www.businessinsider.com/ai-image-generators-artists-copying-style-thousands-images-2022-10.

In general, prominent trends in image processing include face recognition, image coding, image matching, image reconstruction, image segmentation, and digital watermarking.

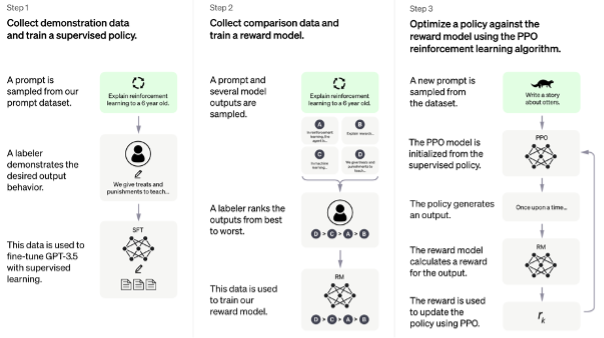

AI-based NLP is another area that is gaining more and more attention. OpenAI’s ChatGPT, launched in November 2022, is widely regarded as the most capable AI chatbot to date. According to OpenAI, it can answer follow-up questions, challenge incorrect premises, and even admit its mistakes.

Reinforcement Learning from Human Feedback: Steps for training OpenAI’s ChatGPT. From https://openai.com/blog/chatgpt/.

Specific research areas in NLP that are especially popular include information extraction, named entity recognition, natural language understanding, semantic similarity, sentiment analysis, syntactic analysis, and word segmentation.

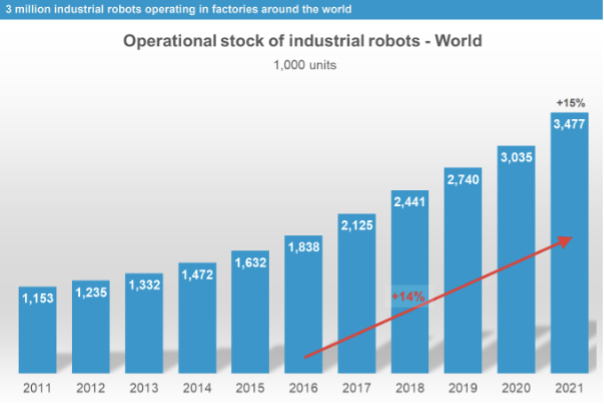

Another prominent trend in computer science research is bioinformatics. There is a growing need to translate biological data into knowledge, and bioinformatics attempts to achieve this by employing computer science, information engineering, and mathematics to analyse biological data. Major research areas include biocommunications, biofeedback, computational biology, and genetic analysis, with applications such as biomarker discovery, software for elucidating protein structures, and next-generation sequencing.

Robotics is another interdisciplinary field relying on computer science. Robots are being used in hospitals, packaging and food delivery, and assembly. Recent reports suggest that roughly 3.5 million industrial robots are currently in operation.

Operational stock of industrial robots. From https://ifr.org/downloads/press2018/2022_WR_extended_version.pdf

Governments and Big Tech companies are investing more and more resources in cybersecurity. Government bodies and social platforms operate in hyper-connected networks, which risk exposing sensitive data to malicious actors. This explains why more research is being done in areas such as cryptanalysis, cryptography, block ciphers, encryption, secret sharing, and security protocols.

Overall, computer science has come a long way from cumbersome, narrowly specialised mechanical machines to devices and algorithms that permeate our everyday lives. Its recent evolution has been shaped by key advances such as the development of personal computers, the invention of the Internet, and research on deep learning. Future research in computer science will continue to be guided by the needs of individuals, businesses, governments, and other research fields.